“To test or not to test, that is the question.” These words were made famous in William Shakespeare’s Hamlet, and they still ring true today. Okay, maybe not these exact words, but you get the idea. I’ve been in the marketing world for many years (we’ll leave it as “many”), and the question still remains: should I begin email testing or not?

The reality is that everyone knows they should test, but sometimes it feels too overwhelming. Where do I begin? What should I test? How do I test? Luckily, testing emails is not nearly as daunting as it may sound. Similar to Oracle’s approach to marketing automation, you have to crawl before you can walk before you can run. Once you understand the basics, start with a few small tests. From there, the sky is the limit.

Why should I bother testing?

Before diving into testing 101, let’s address the biggest question in the room: Why should I test in the first place? Admittedly, testing emails can be time-consuming and may use up already limited resources. Is it really worth it?

YES! Overwhelmingly, yes! Why? For many reasons, but if I can name only one: test to increase conversions. A simple test can significantly impact your final results. Here’s the deal: as marketers, we sometimes think we know how customers will react to a layout, piece of content, or call to action. But in reality, we may be way off. That’s where testing comes in. I’ve conducted many tests in which the winner was shocking. I never thought a particular version would win, but it drew much higher conversion rates than the preferred version.

Goodbye, ego. Hello, higher engagement.

What do I test? Where do I test it?

What to test has a less clear-cut answer. Technically, you can (and should) test everything, including elements on your website, digital media, and email communications. Naturally, I’m hyper-focused on email marketing communications, but keep an open mind when you’re exploring options. Once you get into testing, you’ll never want to stop. It’s addictive.

However, you have to start somewhere. So, if we start with email marketing, what should you test? The answer, like everything else, depends on what you want to improve. Take a long hard look at your current metrics. Pretend for a moment that you want to test a particular email newsletter. First, run some analyses on your engagement metrics. Are you looking to increase open rates, click rates, or both? If your open rates are low, consider testing your subject line or pre-header text. If your click rates are low, perhaps you test your call to action, content, or offer.

With that said, there are many elements of an email that are worth testing. Below is a short list that I am sure you can extend.

- Call to action – placement, wording, color, size, offer

- Subject line

- From/reply to email addresses

- Email layout – single column or two, placement of messages and images

- Personalization

- Body text

- Headline

- Pre-header

- Closing text

- Images – location, size, type

- Content and offers

- Timing – cadence, time of day, day of week, different time zones

The list goes on and on. In short, open your mind to testing anything and everything about your email communications, whether it’s related to layout, content, or actions. Test major items as well as those you consider minor. You may be surprised to find that the little things really do matter. As they say, the devil is in the details.

Although there are no limits to the types of elements you can test, here are some simple rules you should keep in mind when building your testing plans.

- The golden rule of A/B testing is to set up your test so you can select a winner. Define the result metric(s) that you plan to analyze in order to determine which variable or version worked best.

- Make sure to test only one variable at a time. Otherwise, it will be impossible to know which change ultimately impacted the results. You can certainly utilize multivariate testing when complex experience changes are required, but with A/B testing, simple is best.

- Run your tests simultaneously to ensure your results are not skewed by time-based factors. Take time zones into consideration if you are sending to a geographically diverse audience.

- Use an objective list. Split your lists randomly across regions, units, and groups. Test as large a sample as possible for more accurate results. Typically, I recommend at least 10% of your target audience.

- Listen to the empirical data collected. You’re testing for a reason, right? Take your gut instinct out of the decision, and let the data guide you.
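To make the "select a winner" and "let the data guide you" rules concrete, here is a minimal sketch of one common way to check whether the gap between two versions is larger than chance would explain: a two-proportion z-test. This is an illustration only, not how any particular platform picks its winner. The function names and the 0.05 threshold are my own assumptions.

```python
import math


def two_proportion_z_test(conv_a, sent_a, conv_b, sent_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = conv_a / sent_a
    rate_b = conv_b / sent_b
    # Pooled rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def pick_winner(conv_a, sent_a, conv_b, sent_b, alpha=0.05):
    """Name a winner only if the difference clears the chosen threshold."""
    z, p_value = two_proportion_z_test(conv_a, sent_a, conv_b, sent_b)
    if p_value >= alpha:
        return "no clear winner"
    return "B" if z > 0 else "A"


# Hypothetical counts: unique clicks out of emails delivered.
print(pick_winner(100, 1000, 150, 1000))  # B
print(pick_winner(100, 1000, 105, 1000))  # no clear winner
```

The second call shows why sample size matters: a half-point difference on 1,000 sends per version is well within the range of random noise.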

Side note: an expert from Oracle Maxymiser (a website testing and optimization tool) stopped by our blog recently to explain the important factors in testing website experiences. Some of the rules provided can be applied to emails as well! Check it out – A Lesson in Testing: What Makes A Good Test?

How do I test?

Most marketing automation platforms provide a simple way to run A/B tests, and Oracle Eloqua is no different. There are multiple ways to run an A/B test, depending on the level of complexity needed.

Simple Email Campaign

Within the Simple Email campaign builder, running an A/B test could not be easier. When configuring a campaign, simply select A/B Test as the type. Then select your Segment (the group of individuals that will receive the campaign). The Segment should include your test group as well as contacts that will receive the winning email.

Next, choose the two email versions you want to test, A and B. Once selected, choose the percentage of your segment that should receive the test. If you select 10%, Oracle Eloqua will automatically take 10% of your overall segment, split it 50/50, and send half of the test audience version A and the other half version B.

Lastly, decide on the winning metric (unique opens, unique clicks, etc.) as well as the duration or end date of your test. Click “automatically send the winning version” and Oracle Eloqua will run your test, analyze the results, and send the winning version to the remainder of your segment.
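If it helps to see the arithmetic, the split described above can be sketched in a few lines of Python. This only illustrates the idea (take a percentage of the segment, halve it, hold the rest). It is not Oracle Eloqua's actual implementation, and `split_segment` is a hypothetical name.

```python
import random


def split_segment(contact_ids, test_fraction=0.10, seed=None):
    """Split a segment into test halves A and B plus the held-back remainder."""
    rng = random.Random(seed)
    shuffled = list(contact_ids)
    rng.shuffle(shuffled)  # randomize so neither half is biased by list order
    test_size = round(len(shuffled) * test_fraction)
    half = test_size // 2
    return {
        "A": shuffled[:half],
        "B": shuffled[half:test_size],
        "remainder": shuffled[test_size:],  # receives the winning version later
    }


groups = split_segment(range(1000), test_fraction=0.10, seed=1)
print({name: len(members) for name, members in groups.items()})
```

With a 1,000-contact segment and a 10% test, 50 contacts get version A, 50 get version B, and 900 wait for the winner.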

Campaign Canvas

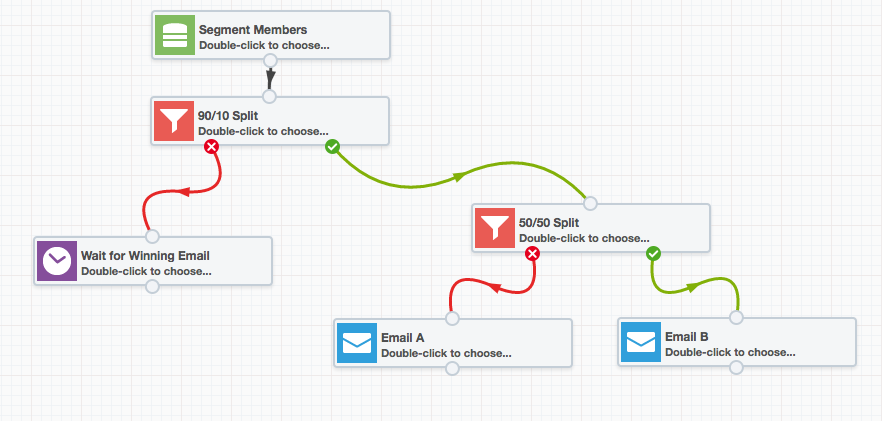

Another way to run an A/B test is directly on the Campaign Canvas. Using a series of shared filters, you can split your segment 90/10, holding 90% of your list for the winning email while the other 10% receives the tests. You can further split your 10% segment so that half receives email A while the other half receives email B. An example of the campaign logic is shown above.

For the 90/10 split, set your filter criteria to pull contacts with an Eloqua Contact ID that matches wildcard pattern “*1?”. This will pull (roughly) 10% of your list. It matches any contact whose ID has “1” as the second-to-last digit. Any digit 0-9 can be used in place of “1”.

For the 50/50 split, set your criteria to look for any contact with an Eloqua Contact ID that ends in an odd (or even) digit. For example, pull any contact with an Eloqua Contact ID that ends in 0, 2, 4, 6, or 8. This will pull roughly 50% of the test group.
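To see why these two filters give roughly 10% and 50%, here is a small Python sketch. It uses Python's `fnmatch` wildcards as a stand-in for the filter's pattern matching (an assumption on my part, since the two may differ in detail), and the contact IDs are made up.

```python
from fnmatch import fnmatchcase


def in_test_group(contact_id, pattern="*1?"):
    """True when the ID's second-to-last digit is 1 (about 1 in 10 IDs)."""
    return fnmatchcase(str(contact_id), pattern)


def assigned_version(contact_id):
    """Even final digit gets email A, odd final digit gets email B."""
    return "A" if int(str(contact_id)[-1]) % 2 == 0 else "B"


# Hypothetical block of 10,000 consecutive contact IDs.
contact_ids = range(1000, 11000)
test_group = [cid for cid in contact_ids if in_test_group(cid)]
version_a = [cid for cid in test_group if assigned_version(cid) == "A"]
version_b = [cid for cid in test_group if assigned_version(cid) == "B"]

print(len(test_group), len(version_a), len(version_b))  # 1000 500 500
```

Real contact IDs are rarely a clean consecutive block, which is why the percentages in practice are approximate rather than exact.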

Once the campaign runs for your allotted time, review the metrics for each version of your test emails. Whichever email performs best against your winning criteria should be sent to the remaining 90% of your list. This 90% is being held in the “Waiting for Winning Email” step. Simply de-activate the campaign, add the winning email to the campaign, and re-activate.

A couple of important notes:

1. Be sure to set the “Waiting for Winning Email” wait step to be long enough to let the test run in its entirety.

2. You can set up the campaign to tell you which email won without looking at the reporting metrics. You can add an “Opened Email?” or “Clicked Email?” decision after both Email A and B to properly funnel contacts into a short Wait Step. This will allow you to visually see the performance of each email directly within the canvas.

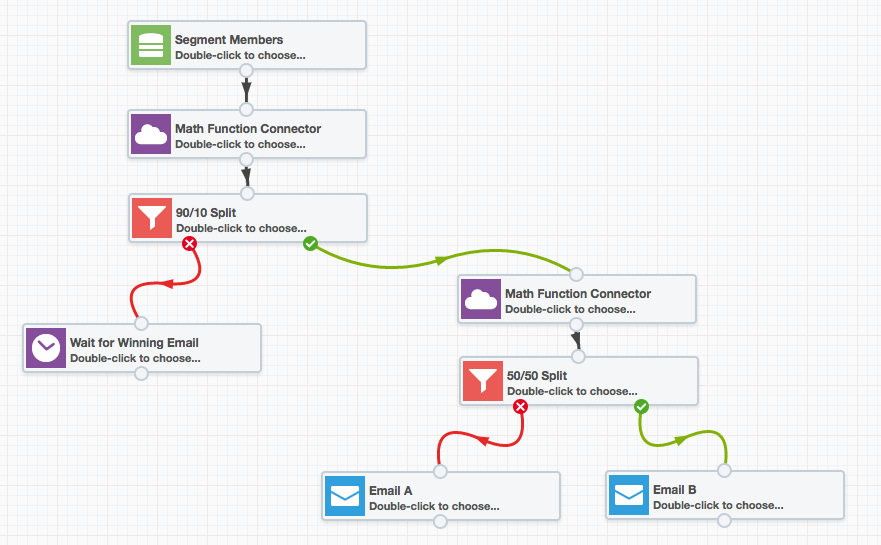

Campaign Canvas Using Statistical Randomness

In the majority of situations, one of the aforementioned processes will work. However, there are times when truly random A/B testing (actual statistical randomness) is required. In this case, you can use the Math Function (Contact) Connector to provide a random number to split your list.

To make this work, you will need to add a Contact field for “Random” or “Randomized.” You will also need to install the Math Function connector (explained quite well here: https://community.oracle.com/community/topliners/do-it/blog/2012/11/16/installing-and-using-the-math-functions-cloud-app). With each use of the Math Function connector, the “Random” contact field will receive a randomized number (double value) between 0 and 1. You then set your filter criteria to look for contacts with “Random” values less than or equal to 0.1 (for 10%) and contacts with values less than or equal to 0.5 (for 50%). Here’s an example of the campaign canvas logic with the use of the Math Function connector.
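The routing logic can be sketched in Python as follows. One assumption to flag loudly: the sketch draws a fresh random number before the 50/50 filter, on the reading that the connector runs again at that step. If the same value were reused, every contact at or below 0.1 would also be at or below 0.5, and the whole test group would land in one version. The function name and cutoffs are illustrative.

```python
import random


def route_contact(rng, test_cutoff=0.10, split_cutoff=0.50):
    """Route one contact to 'hold', 'A', or 'B' using two random draws."""
    first_draw = rng.random()  # stands in for the "Random" contact field
    if first_draw > test_cutoff:
        return "hold"  # waits for the winning email
    # Assumption: the field is re-randomized before the second filter.
    second_draw = rng.random()
    return "A" if second_draw <= split_cutoff else "B"


rng = random.Random(42)
counts = {"A": 0, "B": 0, "hold": 0}
for _ in range(100_000):
    counts[route_contact(rng)] += 1

print(counts)
```

Over a large list, "hold" settles near 90% and A and B near 5% each, without depending on how contact IDs happen to be distributed.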

In Conclusion

No matter how you test, what you test, or why you test, hopefully it is clear that everyone, including you, should test! Even the most savvy marketers don’t always get it right. Sometimes we are surprised by which layout, call to action, or subject line drives the greatest engagement. I encourage you to review your content calendars, and to devise a testing plan. Start small, test a theory or two, and grow from there. With each successive test, you gain valuable knowledge that will turn into higher conversions. Don’t be afraid to start somewhere, and once you begin, just keep testing.

For further information on the various A/B testing methods in Oracle Eloqua, there are a number of excellent articles on Topliners. Examples include:

- E10: How to Run A/B Email Testing on the Campaign Canvas

- Building a Random A/B Split Email Testing Program

- Walkthrough of Randomized A/B Email Test in E10 Campaign Canvas

For additional support using Eloqua, please contact us!